Introduction

Text generation is a branch of artificial intelligence (AI) and natural language processing (NLP) that involves the automatic production of coherent and contextually relevant text. With the evolution of machine learning techniques, especially deep learning, text generation has gained significant traction in recent years. This report aims to elucidate the mechanisms, applications, challenges, and future directions of text generation technologies.

Understanding Text Generation

Text generation can be defined as the process of using computational algorithms to generate human-like text based on input data, rules, or learned patterns. This field leverages statistical methods, machine learning, and deep learning to accomplish its goals.

Techniques in Text Generation

- Rule-Based Systems: These early systems rely on a predefined set of grammatical rules and templates. While they can create text that adheres to specific formats, their inability to produce truly novel content limits their effectiveness.

- Statistical Language Models: These models capture the statistical properties of language. N-gram models, a simple yet effective example, estimate the probability of the next word from the preceding n-1 words, using counts of word sequences observed in a training corpus.

- Machine Learning Models: Machine learning algorithms began to be employed for text generation, particularly during the early 2000s. Techniques such as Markov chains, which are closely related to n-gram models, generate somewhat coherent text by choosing each word according to transition patterns learned from the training corpus.

- Deep Learning Models: The introduction of neural networks has revolutionized text generation. Architectures such as Recurrent Neural Networks (RNNs), Long Short-Term Memory networks (LSTMs), and Gated Recurrent Units (GRUs) have shown a remarkable ability to handle sequences, including text.

- Transformers and Pre-trained Models: With the advent of the Transformer architecture, pre-trained models have set new standards in NLP. GPT (Generative Pre-trained Transformer) models are trained to predict the next token and are used directly for generation, whereas BERT (Bidirectional Encoder Representations from Transformers) is an encoder model used mainly for language understanding tasks rather than for generating text. Models like OpenAI's GPT-3 can generate human-like text, engage in dialogue, summarize information, and even translate languages with impressive accuracy.
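
To make the statistical approaches in the list above more concrete, here is a minimal sketch of a bigram (first-order Markov chain) generator. The toy corpus and the function names `build_bigram_model` and `generate` are illustrative assumptions, not part of any particular library, and a real system would use a far larger corpus plus smoothing.

```python
import random
from collections import defaultdict


def build_bigram_model(tokens):
    """Map each word to the list of words observed immediately after it."""
    model = defaultdict(list)
    for current, following in zip(tokens, tokens[1:]):
        model[current].append(following)
    return dict(model)


def generate(model, start, max_words=10, seed=0):
    """Sample a word sequence by repeatedly picking an observed successor."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    words = [start]
    while len(words) < max_words:
        candidates = model.get(words[-1])
        if not candidates:  # no observed successor: stop early
            break
        # Duplicates in the list make frequent successors more likely.
        words.append(rng.choice(candidates))
    return " ".join(words)


# Hypothetical toy corpus, used only for illustration.
corpus = "the cat sat on the mat and the dog sat on the rug".split()
model = build_bigram_model(corpus)
sample = generate(model, "the", max_words=8)
print(sample)
```

Because each word depends only on the one before it, the output is locally plausible but drifts quickly, which is the limitation that longer-context neural models were designed to address.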

Applications of Text Generation

Text generation has applications across various sectors, demonstrating its versatility and adaptability.

- Content Creation: Automated content generation for blogs, news articles, and marketing copy is among the most prominent applications. AI tools can produce articles in mere seconds, significantly reducing the time and effort required for content generation.

- Conversational Agents: Chatbots and virtual assistants use text generation capabilities to interact with users, providing information, resolving queries, and enhancing user experiences.

- Creative Writing: AI-driven creativity extends to poetry and story generation, where models can co-create content with human authors, offering inspiration and alternative narrative paths.

- Code Generation: AI is also entering the realm of programming by generating code snippets based on user requirements, leveraging learned patterns from extensive codebases.

- Translation Services: Text generation underpins automated translation systems, allowing for the generation of coherent translations that maintain the original text's intent.

- Personalized Recommendations: Text generation is employed to generate tailored content for users, such as personalized emails or product descriptions, enhancing user engagement.

Challenges in Text Generation

While text generation technology has advanced significantly, several challenges persist:

- Coherence and Context: Maintaining coherence over longer texts remains a hurdle. Generating paragraphs that logically flow and relate to each other is challenging, often leading to disjointed outputs.

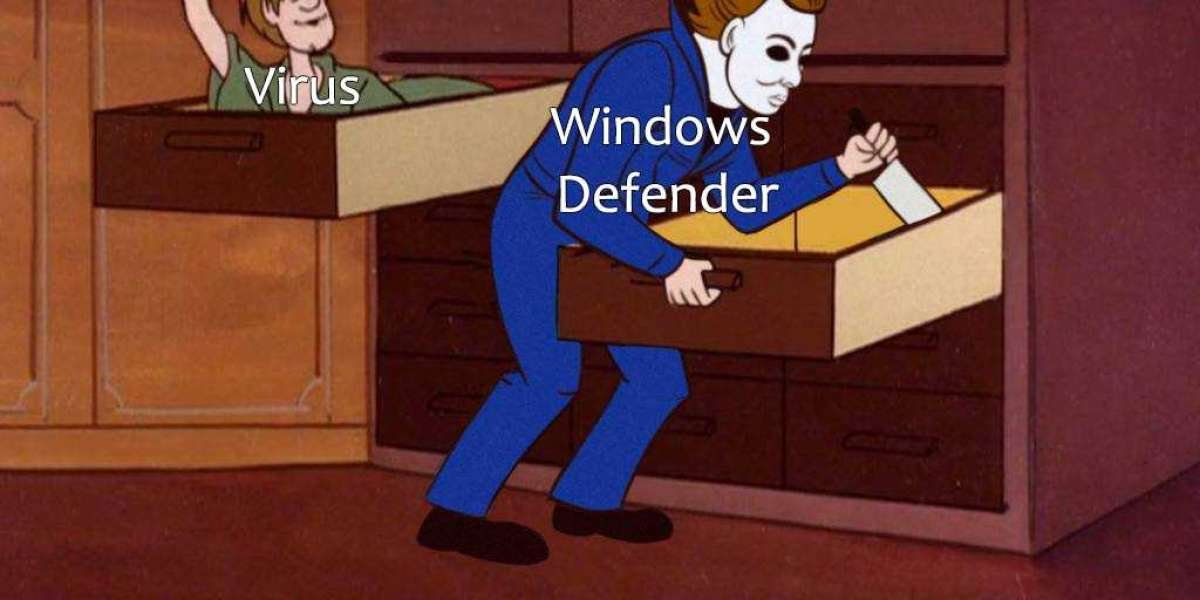

- Bias and Ethical Concerns: AI models are trained on existing data, which can contain biases. This means that generated text can inadvertently perpetuate stereotypes or misinformation. Addressing these biases while ensuring ethical use of AI-generated content is critical.

- Understanding Nuance: Subtleties of language, such as sarcasm or cultural references, are difficult for AI models to grasp. As a result, some generated text may lack the understanding necessary for human-like expression.

- Dependence on Data Quality: The quality of the generated text heavily relies on the quality of the training data. Poorly curated datasets can lead to inaccurate or nonsensical outputs.

- Lack of Creativity: Although models can mimic creative writing, true originality remains elusive. AI-generated content is often based on learned patterns rather than genuine human creativity.

Evaluation of Text Generation

Evaluating the quality of generated text poses a significant challenge. Traditionally, several metrics have been employed:

- BLEU (Bilingual Evaluation Understudy): A precision-oriented metric, often used in machine translation, that measures how many n-grams in the generated text also appear in one or more reference texts.

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): A recall-oriented family of metrics that measures how many n-grams from the reference text are recovered in the generated text, used particularly in summarization tasks.

- METEOR (Metric for Evaluation of Translation with Explicit ORdering): This metric considers synonyms and word stems, providing a more nuanced evaluation of text quality.

- Human Evaluation: Ultimately, human judges are often used to assess the coherence, relevance, and creativity of the generated content, providing qualitative feedback that quantitative metrics may miss.
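
The overlap idea behind BLEU and ROUGE can be sketched in a few lines. This is a simplified illustration only: full BLEU also combines several n-gram orders and applies a brevity penalty, and ROUGE has several variants. The function names and the example sentences are assumptions made for this sketch.

```python
from collections import Counter


def ngrams(tokens, n):
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def overlap_counts(candidate, reference, n):
    """Return (matched, candidate_total, reference_total) n-gram counts."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    # Counter intersection keeps the minimum count per n-gram ("clipping").
    matched = sum((cand & ref).values())
    return matched, sum(cand.values()), sum(ref.values())


def clipped_precision(candidate, reference, n=1):
    """BLEU-style: share of candidate n-grams found in the reference."""
    matched, cand_total, _ = overlap_counts(candidate, reference, n)
    return matched / cand_total if cand_total else 0.0


def rouge_n_recall(candidate, reference, n=1):
    """ROUGE-N-style: share of reference n-grams found in the candidate."""
    matched, _, ref_total = overlap_counts(candidate, reference, n)
    return matched / ref_total if ref_total else 0.0


# Hypothetical example sentences.
reference = "the cat is on the mat".split()
candidate = "the cat sat on the mat today".split()

p1 = clipped_precision(candidate, reference, n=1)
r1 = rouge_n_recall(candidate, reference, n=1)
p2 = clipped_precision(candidate, reference, n=2)
r2 = rouge_n_recall(candidate, reference, n=2)
print(f"unigram precision={p1:.3f} recall={r1:.3f}")
print(f"bigram  precision={p2:.3f} recall={r2:.3f}")
```

Precision divides by the candidate's n-gram count and recall by the reference's, so the two scores differ whenever the texts differ in length. Neither captures meaning, which is why human evaluation remains part of the picture.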

Future Directions

The future of text generation holds several promising trends:

- Improved Model Architectures: Research is ongoing to develop more advanced architectures that better understand context, leading to more coherent and relevant text generation.

- Personalization: Future models will likely incorporate more user-specific data to generate personalized content, enhancing user engagement and satisfaction.

- Interactivity and Multimodality: Text generation will increasingly integrate with other modalities, such as images and audio, creating richer user experiences through interactive storytelling across various media.

- Improving Ethical Standards: The conversation around biases and ethical implications will continue to grow, leading to the development of better guidelines and tools to ensure responsible AI use.

- Artificial Creativity: Research into enabling AI to generate genuinely creative content through innovative techniques will continue, pushing the boundaries of what AI can accomplish.

Conclusion

Text generation is a dynamic and multifaceted field that has captivated researchers and industries alike. By harnessing the power of AI, text generation technologies have changed the way we interact with machines, enhancing content creation, communication, and numerous other applications. While there are challenges to overcome, including issues relating to coherence, bias, and creativity, the future of text generation promises developments that could redefine our understanding of artificial intelligence and its capabilities in human-like communication. As we move forward, it will be critical to prioritize ethical AI use while striving to enhance the quality and creativity of generated text.